🖥️ A Thinking Computer? Scientists Can’t Agree 🤔


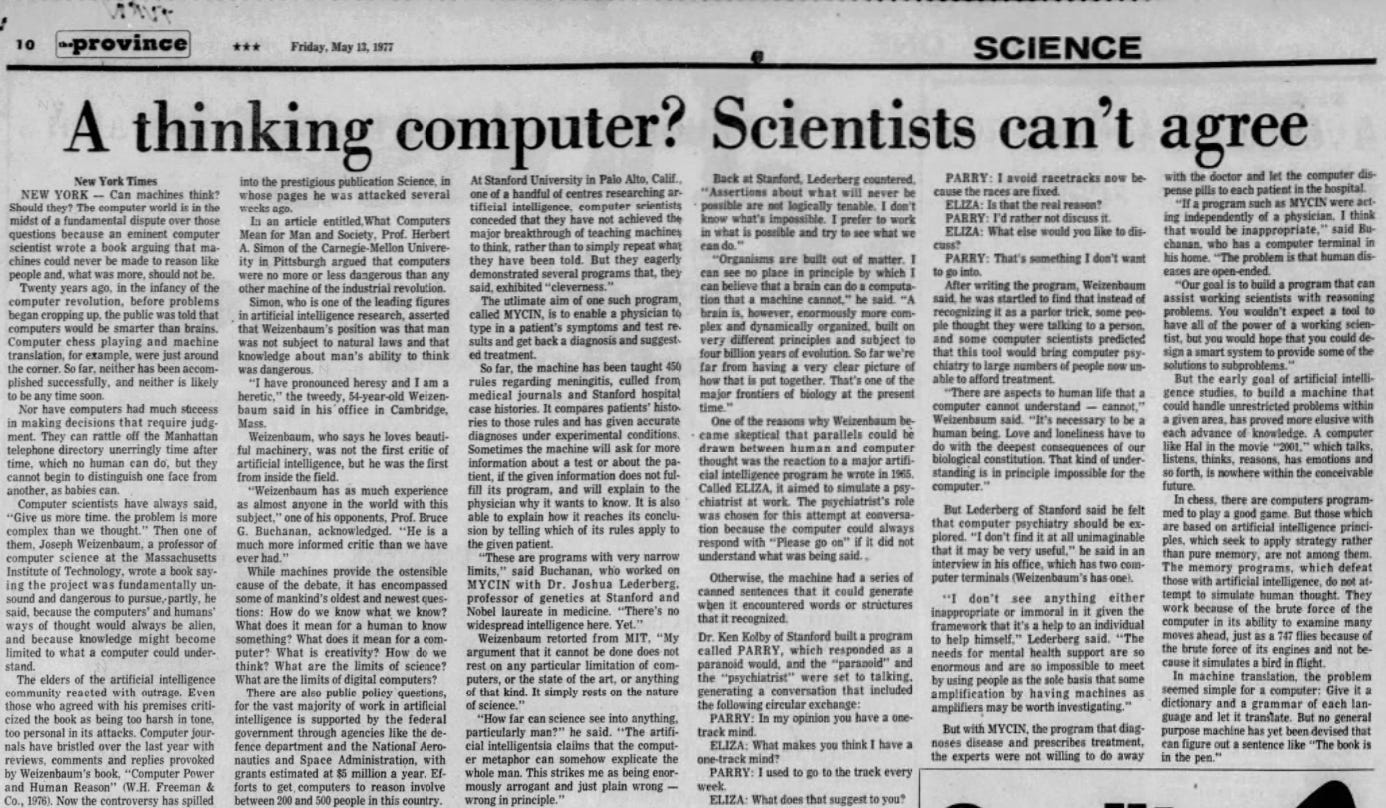

Weizenbaum's primary concern was the ethical implications and limitations of AI. He argued that while machines could simulate certain aspects of human thought, they fundamentally lacked the ability to replicate human intelligence in its true form. This distinction was critical for Weizenbaum, who believed that conflating machine processing with human cognition risked oversimplifying the complexities of human thought and behavior.

One of his key objections was to the notion that machines could replace human decision-making in areas requiring moral judgment. Weizenbaum held that such a belief ignored the profound differences between computational logic and human reasoning. For example, while AI might excel at tasks like diagnosing diseases or playing chess, these capabilities did not amount to understanding or consciousness. He argued that AI systems lacked the emotional and ethical dimensions intrinsic to human decision-making.

Weizenbaum was also critical of the tendency to anthropomorphize machines by attributing human-like qualities to algorithms. He warned that such thinking could lead to unrealistic expectations of AI and could harm society by fostering dependency on systems that were inherently limited. This critique extended to programs like MYCIN, which was designed to assist physicians in diagnosing medical conditions. While acknowledging the utility of such systems, Weizenbaum cautioned against viewing them as replacements for human expertise.

Weizenbaum’s reflections remain relevant today, as advances in AI continue to raise questions about the relationship between humans and machines. His insistence on recognizing the limits of AI and prioritizing ethical considerations serves as a reminder that technological progress must be guided by thoughtful reflection and a commitment to the common good.